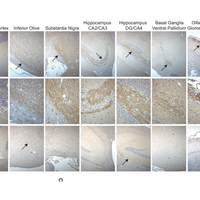

ABOVE: Antibody labeling of C9ORF72 in the mouse brain

FROM FIGURE 5, C. LAFLAMME ET AL., ELIFE, 8:E48363, 2019

Medical science has a problem, and everyone knows it.

Imagine driving a car with a navigation system that is right just half the time, or doing math with a calculator that knows only half the multiplication table. It’s simply not rational, yet scientists are doing something similar when we use antibodies in research.

When correctly applied, antibodies are stunningly accurate. They can detect one protein out of tens of thousands in a sample.

The problem is that many commercially available antibodies, perhaps more than half, do not target the protein their manufacturers claim they do, or they recognize the intended target but also cross-react with unintended targets.


Ill-defined antibodies contribute significantly to a lack of reproducibility in important research efforts, including preclinical studies. Estimates suggest that up to 90 percent of a select group of 53 landmark preclinical studies suffered from such flaws.

My lab recently developed a procedure that allows us to quickly and relatively cheaply validate antibodies by comparing control cells expressing the target to identical cells in which the target protein has been selectively deleted. We used this approach to test 16 commercially available antibodies that supposedly detect the protein product of the ALS-related gene C9orf72.

Of the 16 antibodies, two recognized C9ORF72 specifically on immunoblot, and only one was able to capture the majority of the protein in immunoprecipitation. That same antibody was the only one that recognized C9ORF72 specifically in immunofluorescence. In each case, the antibody specifically recognized C9ORF72 in parental cells and the signal was completely lost in the cells where C9ORF72 had been selectively depleted. After studying the literature, we found none of these three antibodies had been used in any published scientific papers before ours.

If you read the published papers about C9ORF72, you will see drastically different conclusions about the expression and cellular localization of C9ORF72. I believe that this inconsistency is at least partly due to the use of antibodies that do not detect the protein they are supposed to detect.

The fact that antibodies often don’t work will not come as a shock to scientists like myself who use them in research. It’s a commonly understood fact, and an accepted risk of using them. We do not demand better antibodies from manufacturers, who charge about $500 per sample. One estimate is that scientists waste approximately $350 million per year in the US alone on antibodies that don’t work.

As we worked on our C9ORF72 paper, it became less about one gene and more about a template other labs can use to validate antibodies. The procedures we use are not revolutionary, and in fact this makes our approach widely applicable to any laboratory skilled in the art, yet to my knowledge this is one of the first papers to describe a streamlined process for antibody validation. The paper is published in the open-access journal eLife for anyone to read. (Following these experiments, I became a consultant for GeneTex, one of the companies whose antibodies were tested in the study, and my role applies to new antibodies not reported in the study.)

One paper will not fix the problem. Change will only happen when scientists, granting agencies, scientific journals, and manufacturers demand better antibodies. Validation costs money, but as it becomes standard, costs will drop, and ultimately labs will save money by avoiding antibodies that don’t work. We can build an open database where anyone can go and see which antibody has been validated for a particular protein. Over time, validation will become easier and more cost-effective, to the point where it will be expected, if not demanded, by funding agencies and journal editors.

It’s well known that the sciences, even the “hard” sciences that include cell biology, are facing a reproducibility crisis. In a 2016 survey by Nature, more than 70 percent of scientists said they had tried and failed to reproduce another scientist’s experiments. When they tried to reproduce the results of their own experiments, the success rate rose to a mediocre 50 percent. This simply isn’t good enough. Millions of dollars and thousands of hours of work are being wasted on research that is not reliable. The reproducibility crisis means less fundamental research can bridge the gap between theory and practical applications that benefit humanity, especially patients suffering from diseases for which we have no cures.

This is particularly true in the case of neurological disease, where the complexity of the brain makes progress difficult. In 2017, the US Food and Drug Administration approved edaravone, the first ALS drug approved in 22 years. Development of drugs for Parkinson’s disease has also been slow.

A large part of the reproducibility crisis stems from poor antibody validation. We owe it to patients to do better.

Peter S. McPherson is a James McGill Professor of Neurology and Neurosurgery and Anatomy and Cell Biology at The Neuro (Montreal Neurological Institute and Hospital) of McGill University, where he is the Director of the Neurodegenerative Disease Research Group. He is also a Fellow of the Royal Society of Canada.
